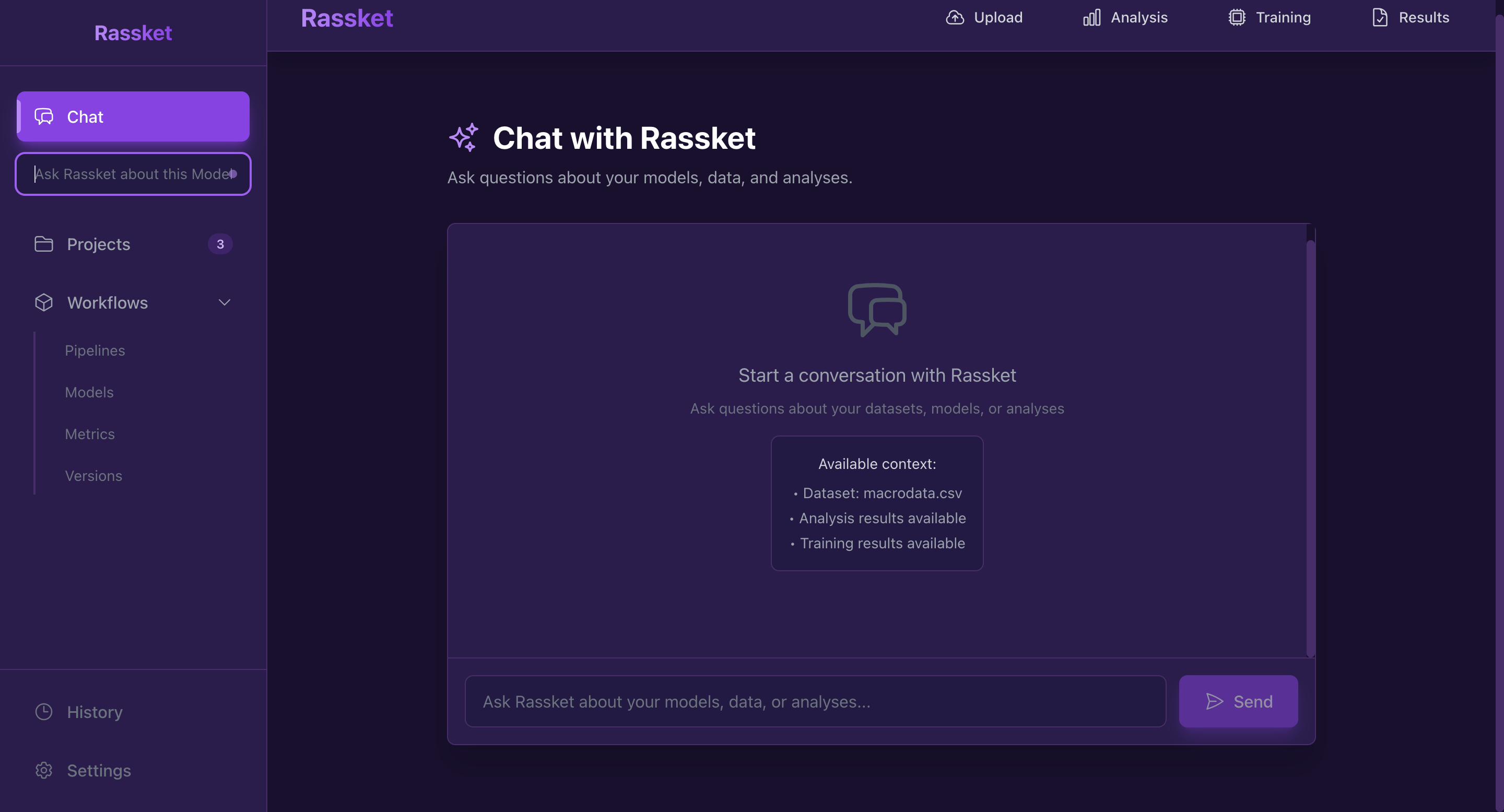

Dashboard Walkthrough

This guide walks through each stage of the Rassket dashboard in the same order the UI presents it. Follow along in the platform to see what happens at each stage.

Step 1: Data Ingestion

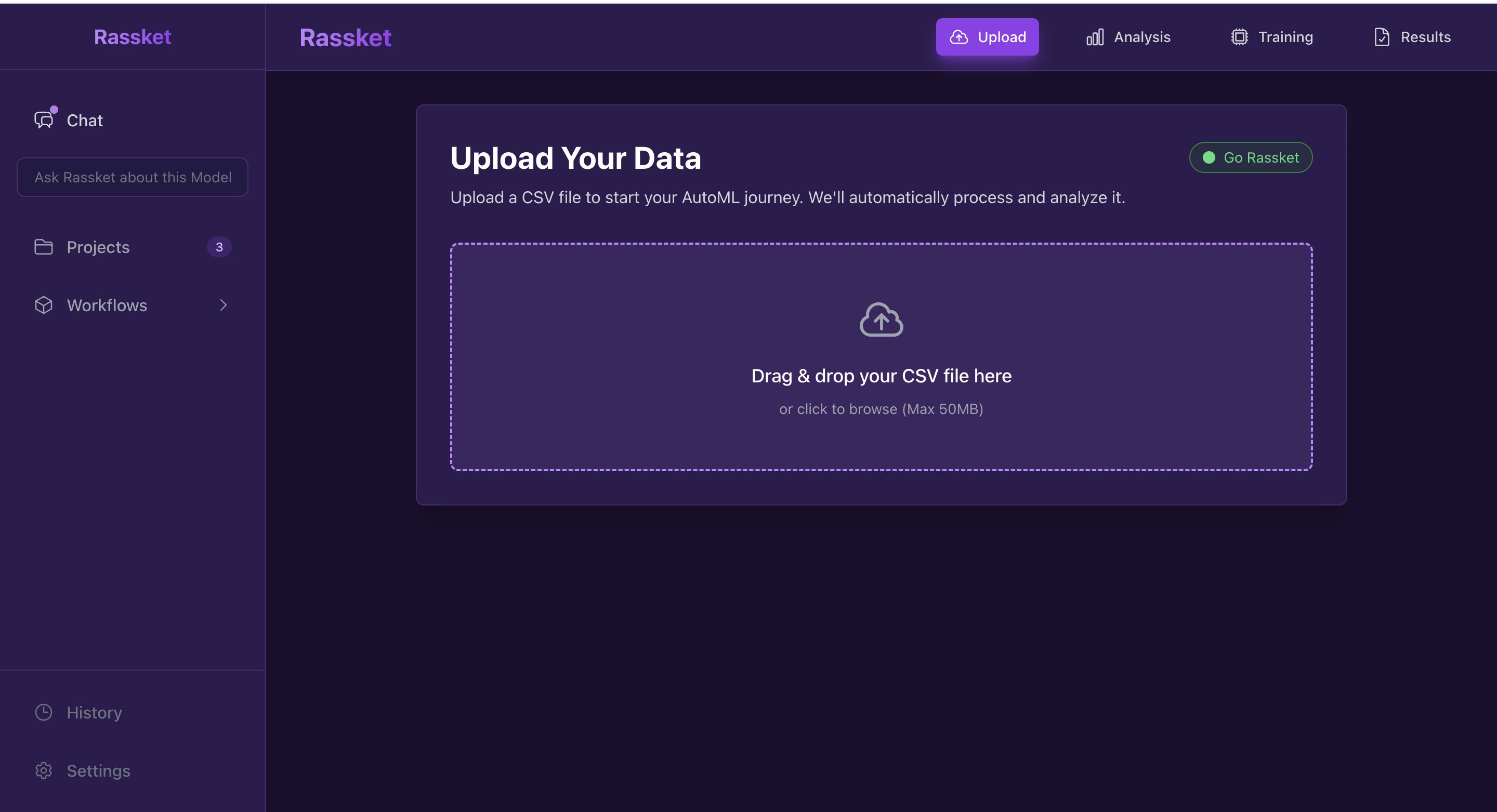

What You See

- Upload area with drag-and-drop interface

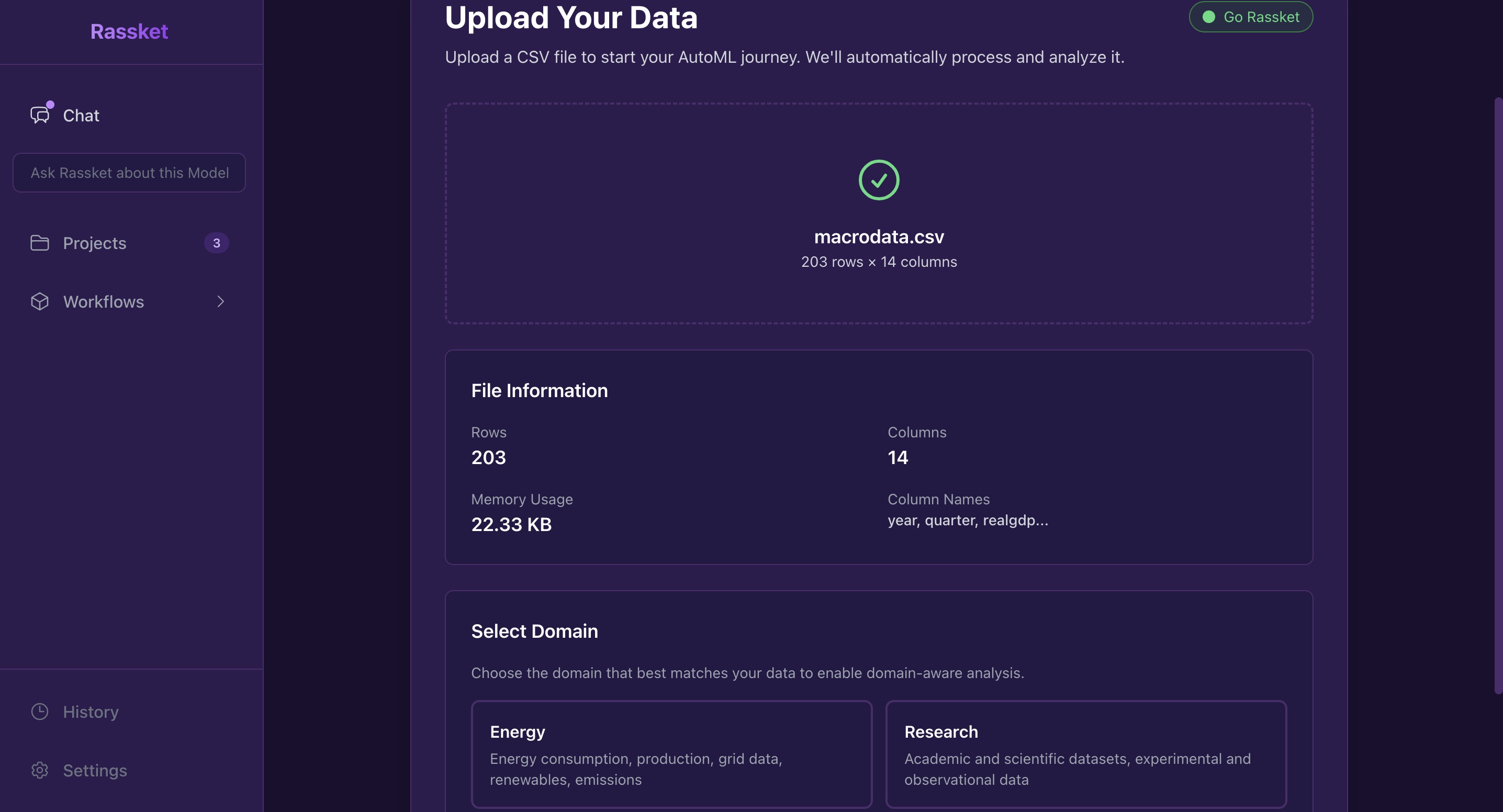

- File information display (rows, columns, memory usage)

- Domain selection interface

- Preprocessing status indicator

What the System Does

- File Upload: Validates CSV format and size (max 50MB)

- Schema Detection: Analyzes column names, data types, and structure

- Quality Assessment: Detects missing values, duplicates, and data issues

- File Storage: Saves uploaded file for processing
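The checks listed above can be sketched with Python's standard library. This is an illustration only, not Rassket's implementation: the function name `inspect_csv`, the report keys, and the sample data are assumptions, and only the 50 MB limit comes from this guide.

```python
import csv
import io

MAX_BYTES = 50 * 1024 * 1024  # 50 MB upload limit stated in this guide


def inspect_csv(raw: bytes) -> dict:
    """Validate size and summarize a CSV payload (hypothetical helper)."""
    if len(raw) > MAX_BYTES:
        raise ValueError("file exceeds the 50 MB limit")

    rows = list(csv.reader(io.StringIO(raw.decode("utf-8"))))
    if not rows:
        raise ValueError("file is empty")

    header, body = rows[0], rows[1:]
    if any(len(r) != len(header) for r in body):
        raise ValueError("row length does not match header")

    # Count empty cells per column as a simple missing-value check.
    missing = {
        name: sum(1 for r in body if r[i].strip() == "")
        for i, name in enumerate(header)
    }
    # Rows identical to an earlier row count as duplicates.
    duplicates = len(body) - len({tuple(r) for r in body})

    return {
        "rows": len(body),
        "columns": len(header),
        "missing": missing,
        "duplicates": duplicates,
    }


# Made-up sample: one missing value and one repeated row.
sample = b"timestamp,load_kw\n2024-01-01,10.5\n2024-01-02,\n2024-01-01,10.5\n"
report = inspect_csv(sample)
```

Running the same kind of check locally before uploading can help you spot an oversized or malformed file early.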

Why This Step Matters

Data ingestion is the foundation of your ML pipeline. Validating the file up front catches common issues, such as an invalid format, an oversized file, missing values, or duplicate rows, before they affect later steps. Rassket runs these checks automatically.

Upload your CSV file using drag-and-drop or click to browse

File information shows rows, columns, and memory usage

After upload, file details are displayed

Step 2: Domain Selection

What You See

- Two domain options: Energy and Research

- Optional sub-domain selection

- Preprocessing progress indicator

- Success confirmation when preprocessing completes

What the System Does

- Domain Selection: You choose Energy or Research domain

- Sub-Domain Selection (Optional): Refine to specific use case

- Domain-Aware Preprocessing: Applies specialized feature engineering

- Feature Generation: Creates domain-specific features automatically

Why This Step Matters

Domain selection enables specialized feature engineering that improves model accuracy and generates domain-appropriate explanations. This is what makes Rassket different from generic AutoML tools.

See Domain Selection for detailed information about domains and sub-domains.

Choose between Energy and Research domains

Optionally select a sub-domain for refined analysis

Domain-aware preprocessing applies specialized feature engineering

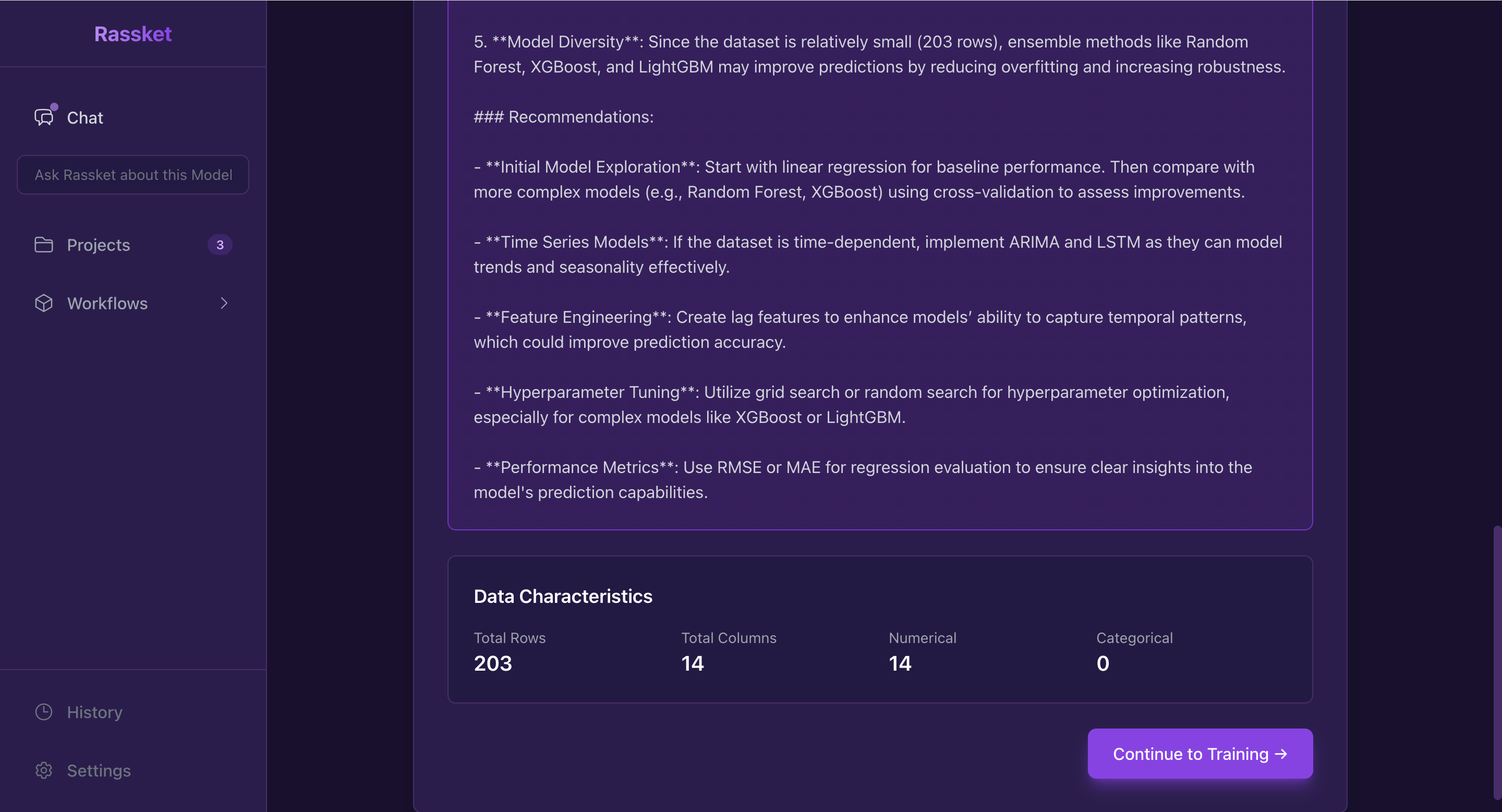

Step 3: Schema Understanding & Analysis

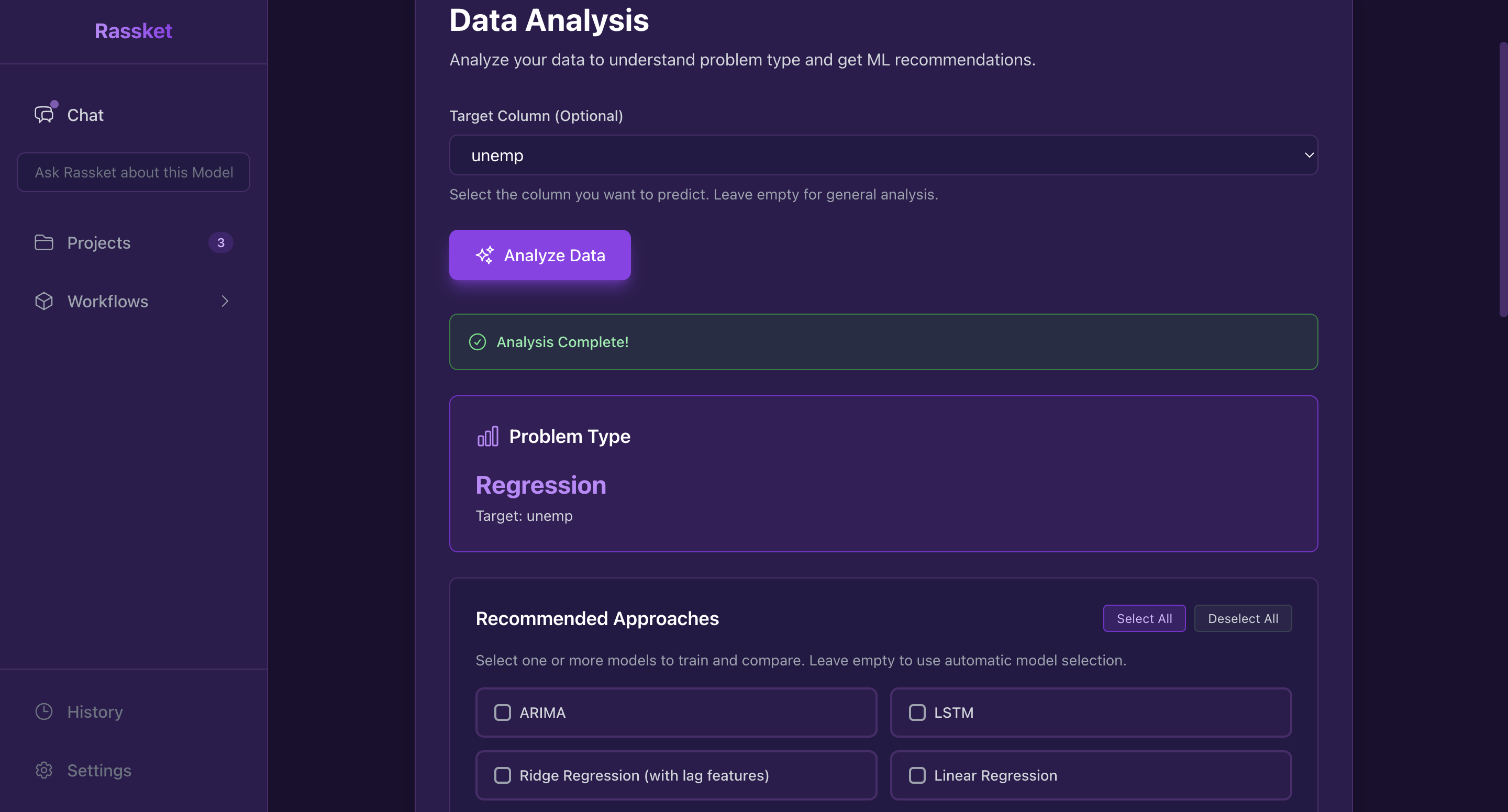

What You See

- Target column selector (dropdown)

- "Analyze Data" button

- Problem type detection result

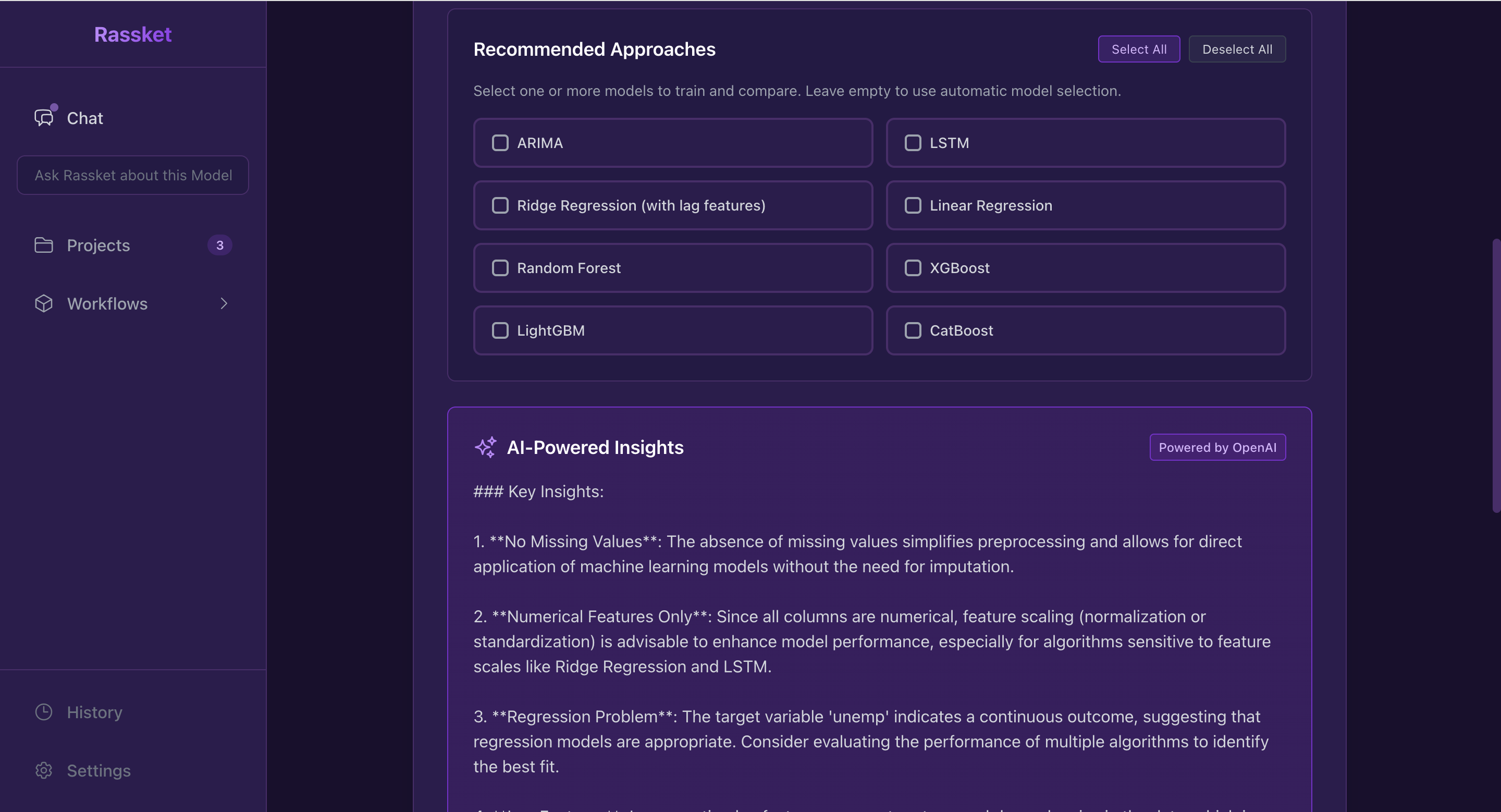

- Recommended ML approaches (with selection checkboxes)

- AI-powered insights (if OpenAI is configured)

- Data characteristics summary

What the System Does

- Target Column Selection: You optionally select which column to predict

- Problem Type Detection: Automatically detects Regression, Binary Classification, or Multi-class Classification

- Model Recommendations: Suggests suitable ML approaches based on your data

- Data Analysis: Analyzes data characteristics (rows, columns, types, distributions)

- AI Insights: Generates domain-aware insights about your data (if enabled)
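To make problem type detection concrete, here is a minimal sketch of one possible heuristic. The rules, the function name `detect_problem_type`, and the `max_classes` threshold are assumptions for illustration; this guide does not document how Rassket actually decides.

```python
def detect_problem_type(target_values, max_classes=20):
    """Guess the problem type from a target column (hypothetical heuristic)."""
    values = [v for v in target_values if v is not None]
    distinct = set(values)

    # Exactly two distinct labels: treat as binary classification.
    if len(distinct) == 2:
        return "binary_classification"

    all_numeric = all(
        isinstance(v, (int, float)) and not isinstance(v, bool) for v in values
    )
    # Many distinct numeric values suggest a continuous target.
    if all_numeric and len(distinct) > max_classes:
        return "regression"
    # Fractional numeric values also suggest a continuous target.
    if all_numeric and any(
        isinstance(v, float) and not v.is_integer() for v in values
    ):
        return "regression"

    return "multiclass_classification"
```

A heuristic like this can misjudge edge cases (for example, integer-coded ratings), which is one reason to confirm the detected problem type before training.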

Why This Step Matters

Understanding your problem type ensures Rassket selects appropriate models and evaluation metrics. The analysis phase helps you understand your data before training begins.

Model Selection

You can:

- Select Specific Models: Choose which models to train and compare

- Select All: Train all recommended models

- Deselect All: Clear your selection and let Rassket choose the models automatically

Select target column and analyze your data

Automatic problem type detection and model recommendations

Choose specific models to train or use automatic selection

Step 4: Feature Engineering

What Happens (Behind the Scenes)

Before model training, Rassket automatically performs feature engineering:

- Missing Value Imputation: Handles missing data appropriately for your domain

- Feature Creation: Generates domain-specific features

- Encoding: Converts categorical variables to numeric

- Scaling: Normalizes features for model compatibility

- Feature Selection: Identifies and removes redundant features
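Three of the steps above (imputation, encoding, and scaling) can be sketched in plain Python. The specific techniques shown here, median imputation, one-hot encoding, and z-score standardization, are common choices picked for illustration; the guide does not state which methods Rassket applies.

```python
from statistics import mean, median, pstdev


def impute_median(values):
    """Replace None entries with the median of the known values."""
    known = [v for v in values if v is not None]
    fill = median(known)
    return [fill if v is None else v for v in values]


def one_hot(values):
    """Encode categories as 0/1 indicator rows, in sorted category order."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return rows, categories


def standardize(values):
    """Rescale to zero mean and unit (population) standard deviation."""
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]  # constant column carries no signal
    return [(v - mu) / sigma for v in values]
```

For example, `impute_median([1.0, None, 3.0])` fills the gap with `2.0`, and `one_hot(["b", "a", "b"])` yields two indicator columns ordered as `["a", "b"]`.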

Domain-Specific Engineering

Based on your domain selection:

- Energy: Temporal features, lag variables, rolling statistics, peak indicators

- Research: Statistical transformations, interaction terms, experimental features
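As a rough illustration of the Energy features named above, the sketch below derives a lag variable, a rolling mean, and a peak indicator from a short series. The function names, the window size, and the threshold are hypothetical; the features Rassket actually generates may differ.

```python
def lag(series, k):
    """Shift the series back by k steps, padding the start with None."""
    if k < 0:
        raise ValueError("k must be non-negative")
    return ([None] * k + list(series))[: len(series)]


def rolling_mean(series, window):
    """Mean of the current and previous (window - 1) values; None until full."""
    out = []
    for i in range(len(series)):
        if i + 1 < window:
            out.append(None)
        else:
            chunk = series[i + 1 - window : i + 1]
            out.append(sum(chunk) / window)
    return out


def peak_flags(series, threshold):
    """Mark readings at or above a threshold as peaks (1) or not (0)."""
    return [1 if v >= threshold else 0 for v in series]


load_kw = [10, 12, 11, 15]  # made-up readings for illustration
features = {
    "lag_1": lag(load_kw, 1),
    "rolling_mean_2": rolling_mean(load_kw, 2),
    "is_peak": peak_flags(load_kw, 12),
}
```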

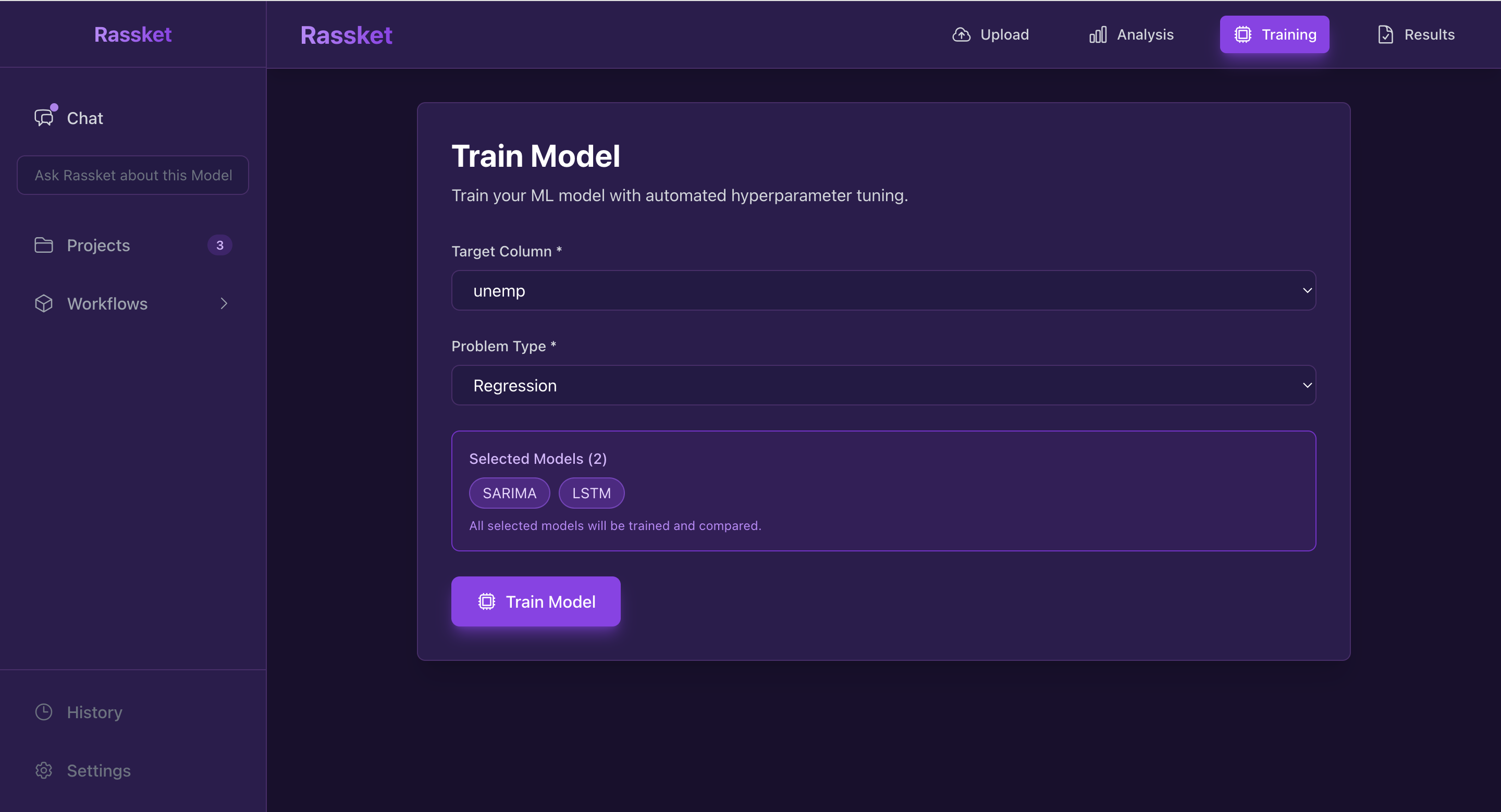

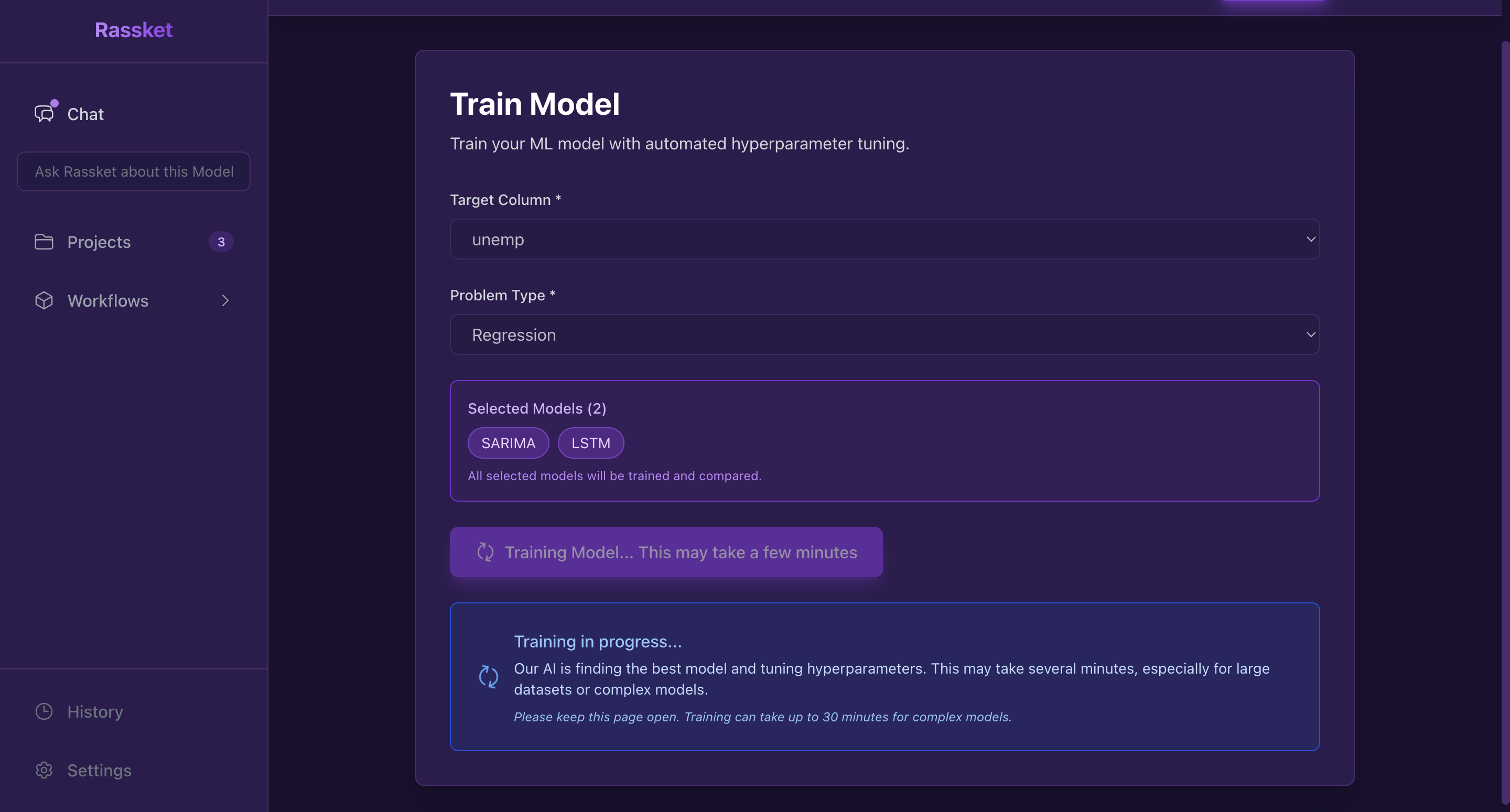

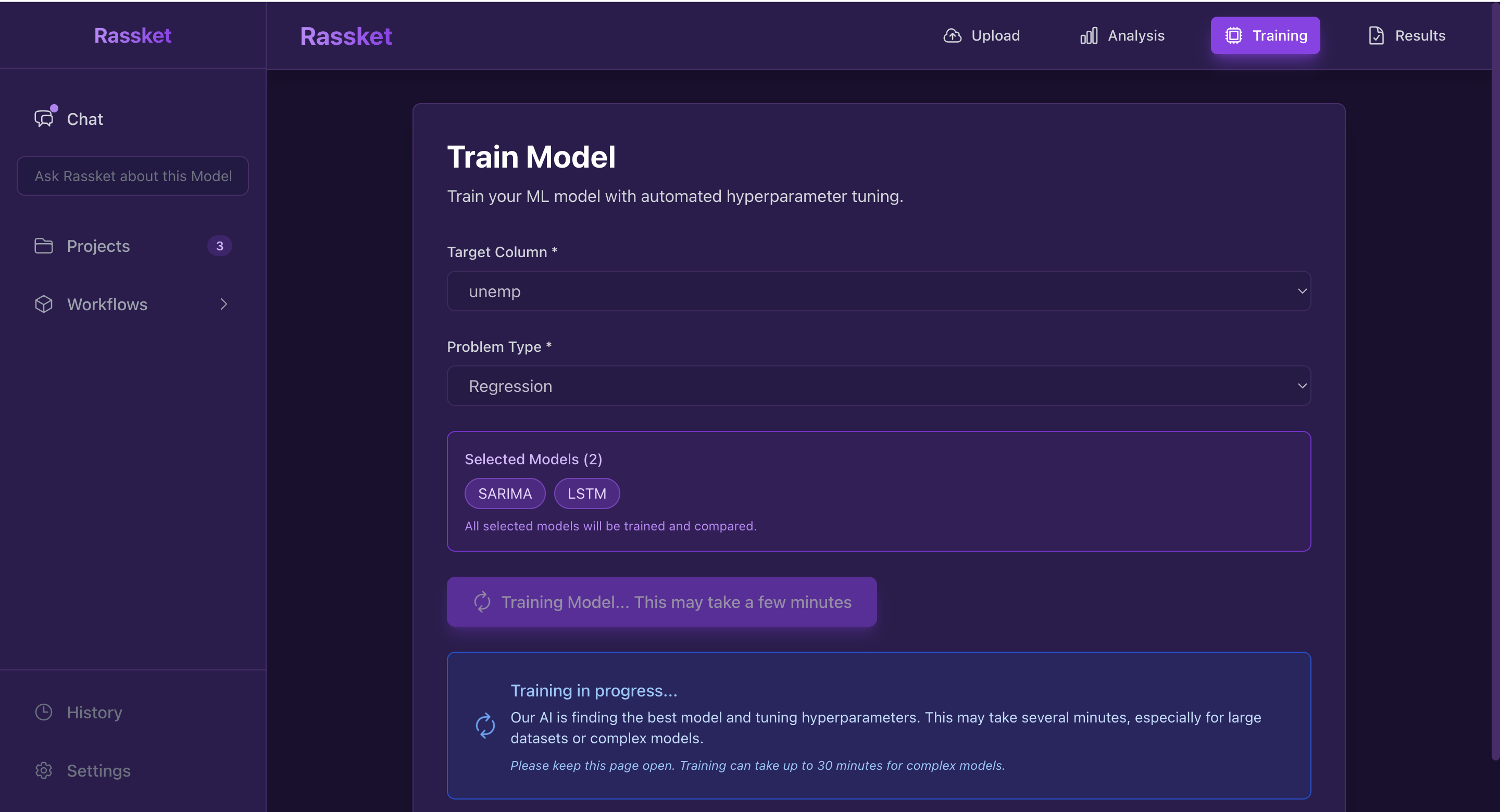

Step 5: Model Training (AutoML)

What You See

- Target column selector (required for training)

- Problem type selector (Regression, Binary Classification, Multi-class Classification)

- Selected models display (if you selected specific models)

- "Train Model" button

- Training progress indicator

- Training results summary

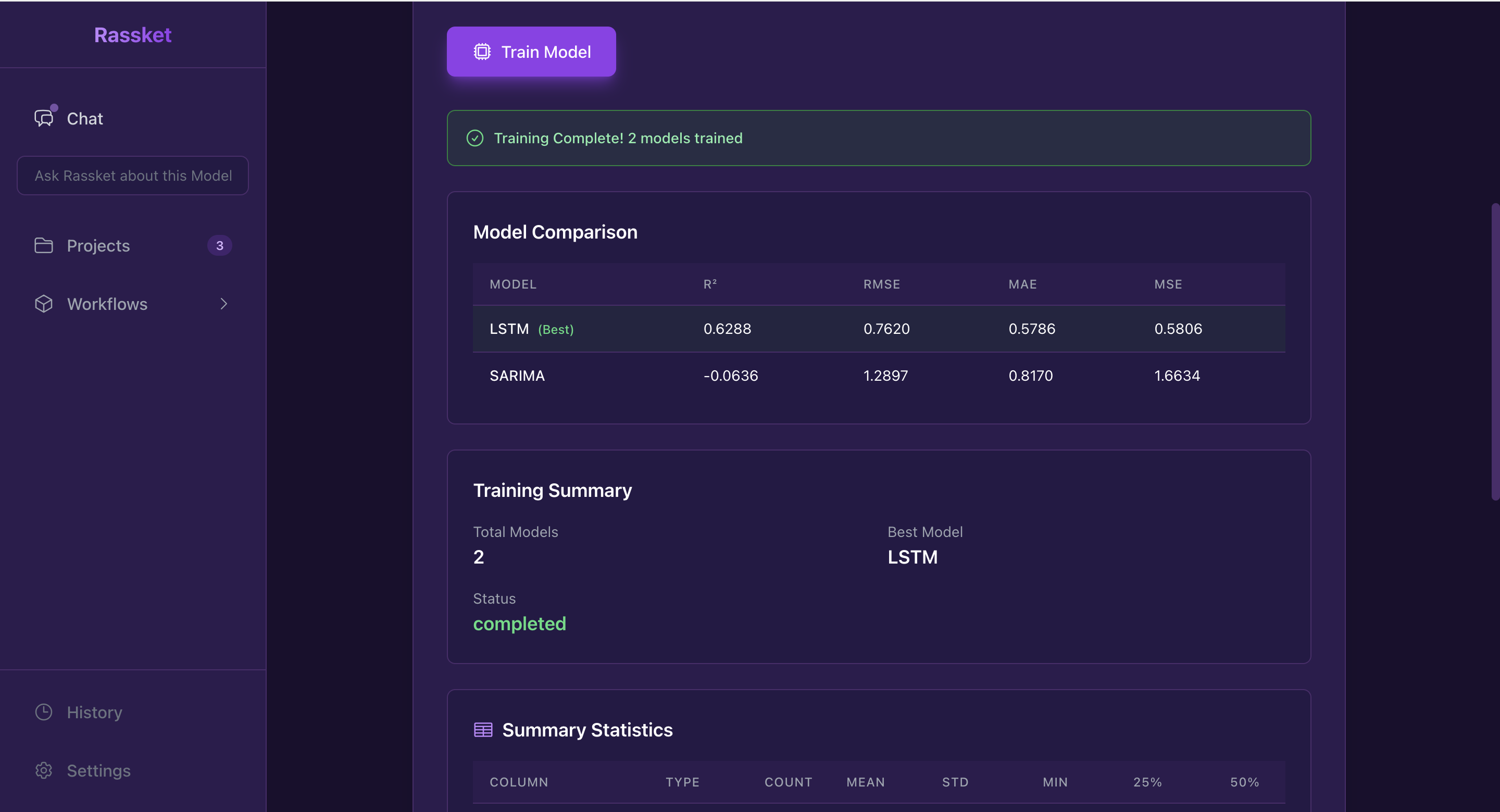

- Model comparison table (if multiple models trained)

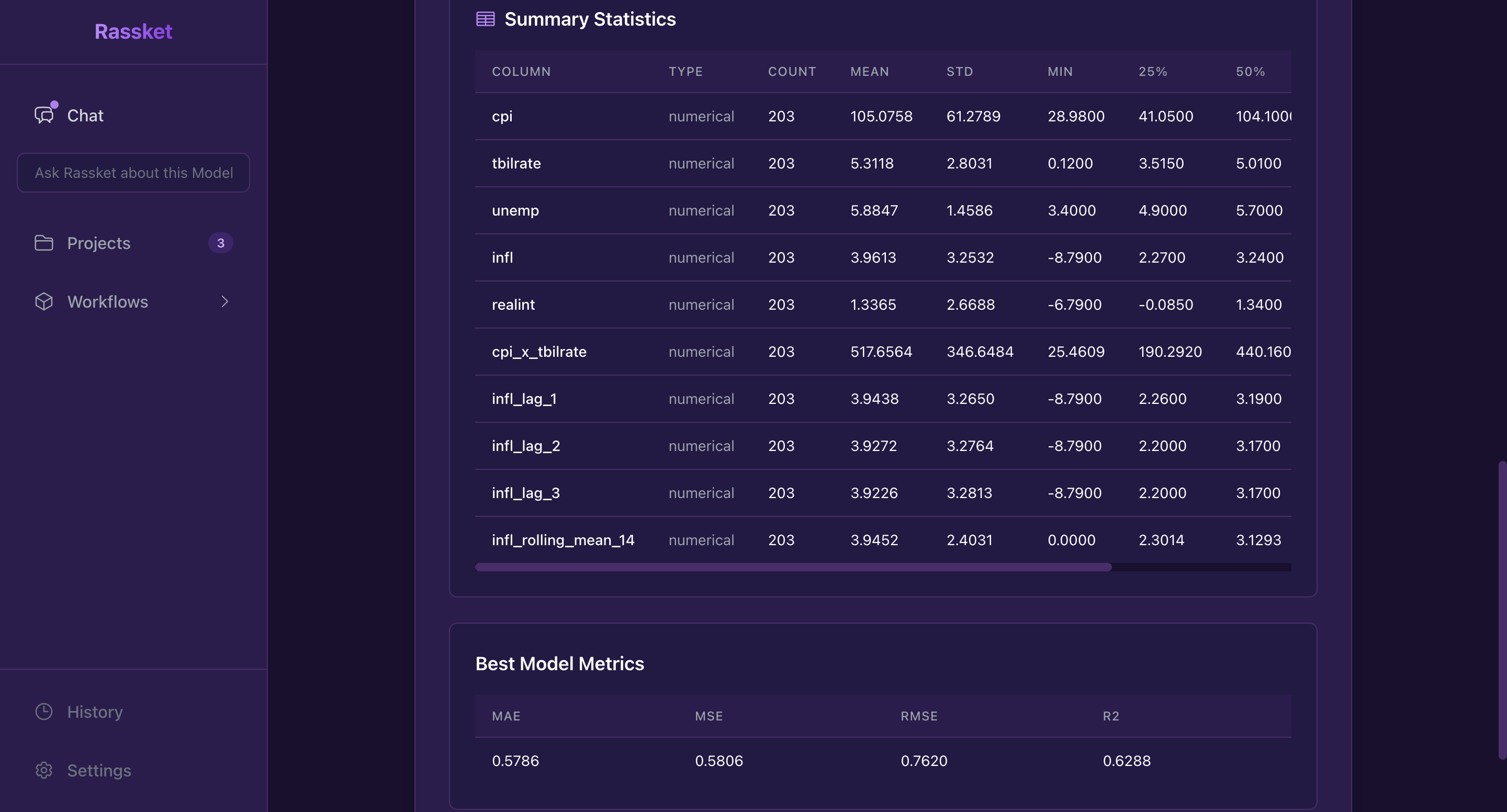

- Summary statistics table

- AI explanation of results

What the System Does

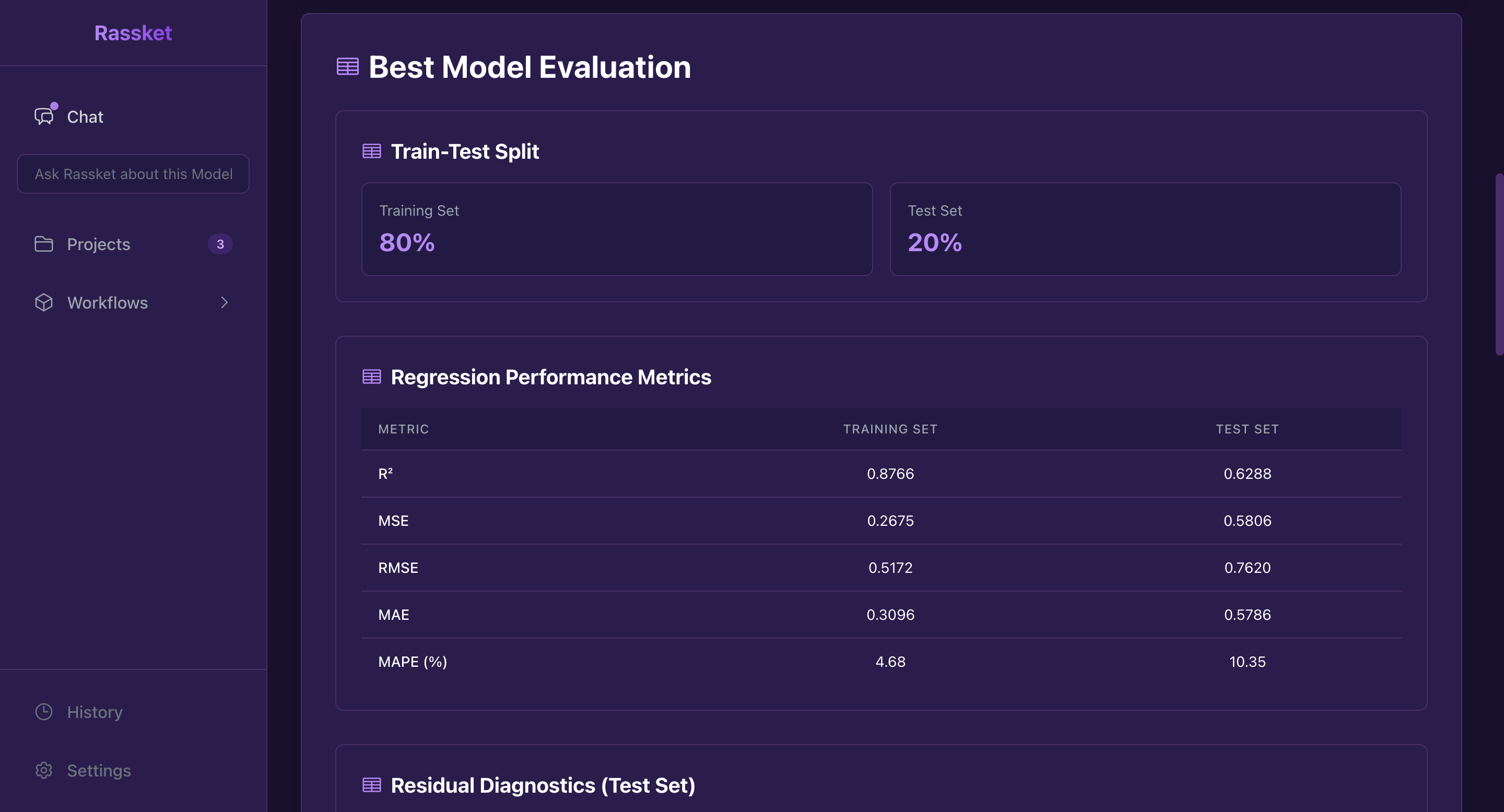

- Data Splitting: Automatically splits data into train/validation/test sets

- Model Selection: Selects models based on your choices or automatically

- Hyperparameter Tuning: Uses Optuna to optimize hyperparameters for each model

- Model Training: Trains each selected model

- Model Comparison: Compares performance across models

- Best Model Selection: Identifies the best-performing model

- Comprehensive Evaluation: Generates detailed metrics and diagnostics
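Two of the steps above, data splitting and best model selection, can be sketched as follows. The split fractions, the seed, the function names, and the result values are all assumptions made for illustration; the guide does not state the ratios or selection metric Rassket uses.

```python
import random


def split_rows(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle rows and split them into train, validation, and test lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded for reproducibility
    n_test = round(len(shuffled) * test_frac)
    n_val = round(len(shuffled) * val_frac)
    test = shuffled[:n_test]
    val = shuffled[n_test : n_test + n_val]
    train = shuffled[n_test + n_val :]
    return train, val, test


def pick_best(results, metric="rmse", lower_is_better=True):
    """Return the result entry with the best value for the chosen metric."""
    chooser = min if lower_is_better else max
    return chooser(results, key=lambda r: r[metric])


# Placeholder scores, not real benchmark results.
results = [
    {"model": "model_a", "rmse": 4.2},
    {"model": "model_b", "rmse": 3.7},
    {"model": "model_c", "rmse": 3.9},
]
best = pick_best(results)  # the entry for "model_b"
```

A random shuffle is shown for simplicity. For time-ordered data, such as energy load series, a chronological split is usually the safer choice because it avoids training on observations that come after the test period.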

Why This Step Matters

This step is the core of Rassket's AutoML engine. It automatically handles:

- Model selection from a library of algorithms

- Hyperparameter optimization (no manual tuning needed)

- Cross-validation for robust evaluation

- Model comparison to find the best performer

Training Results

After training completes, you'll see:

- Model ID: Unique identifier for your trained model

- Model Type: The algorithm used (XGBoost, LightGBM, CatBoost, etc.)

- Status: Training completion status

- Metrics: Performance metrics (R², RMSE, MAE for regression; Accuracy, Precision, Recall, F1 for classification)

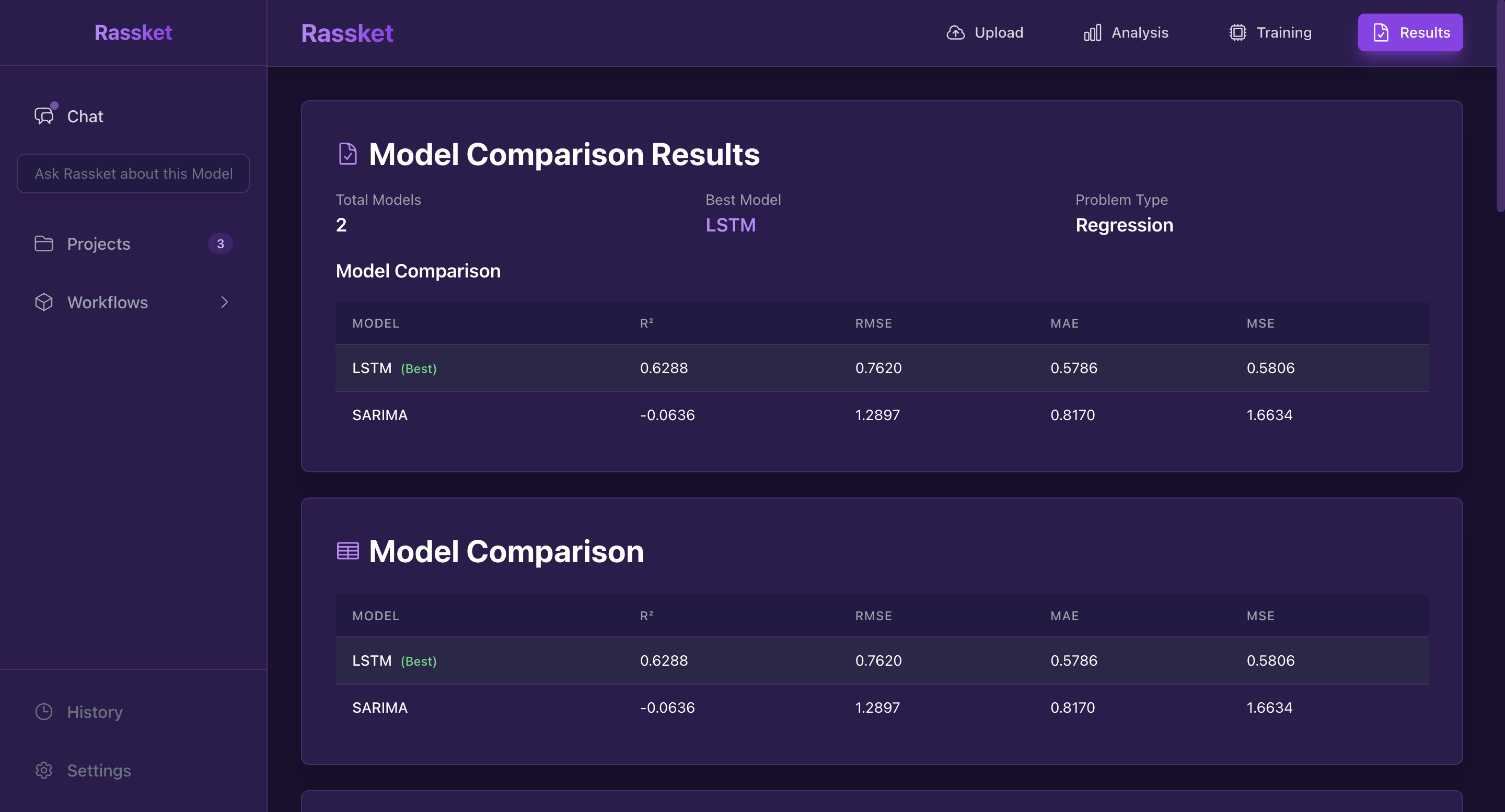

- Comparison Table: Side-by-side comparison if multiple models were trained

Configure target column and problem type for training

Watch real-time training progress

View training summary with model ID and metrics

Compare multiple models side-by-side

Detailed evaluation metrics and statistics

AI-generated insights about model performance

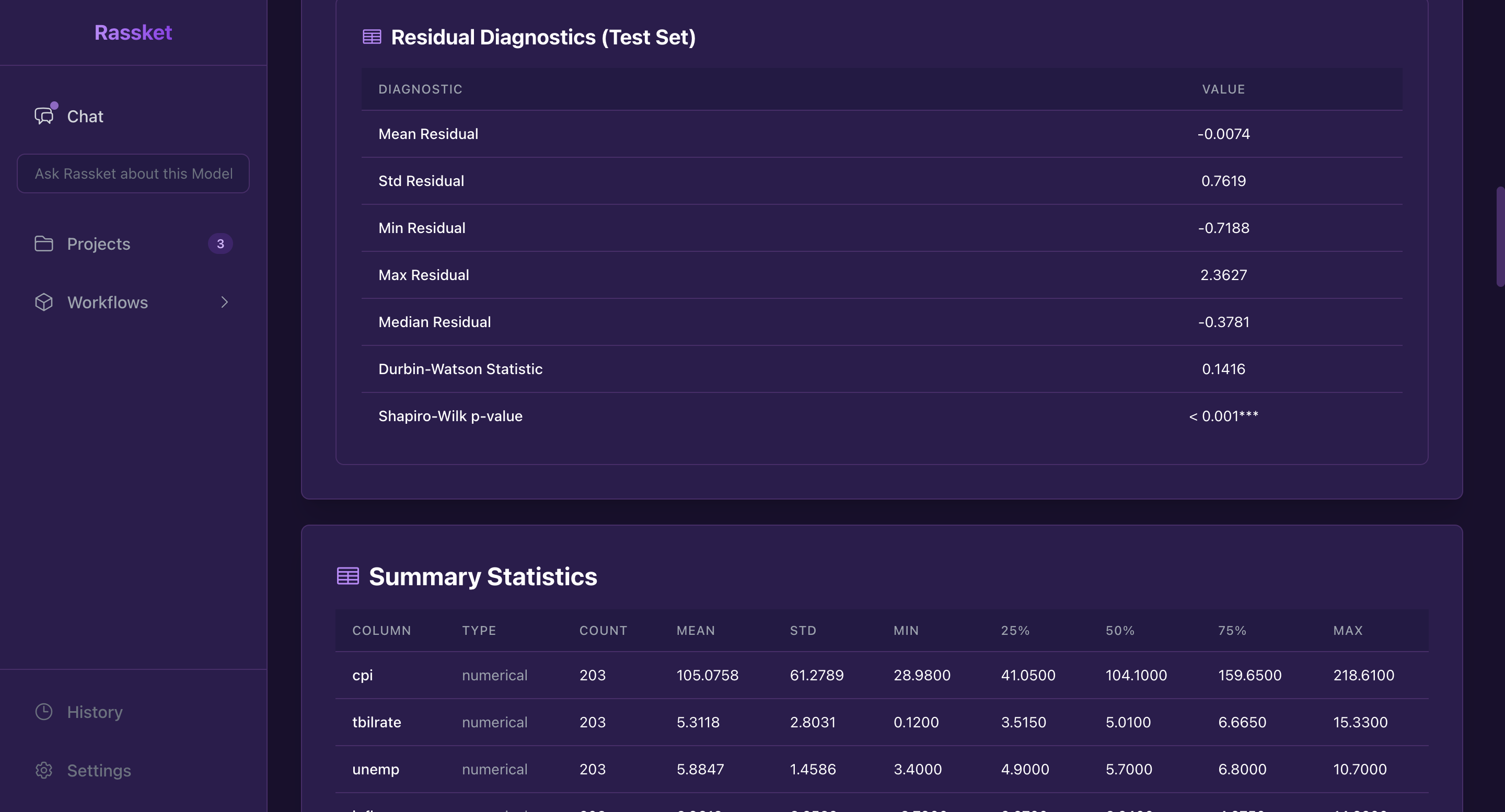

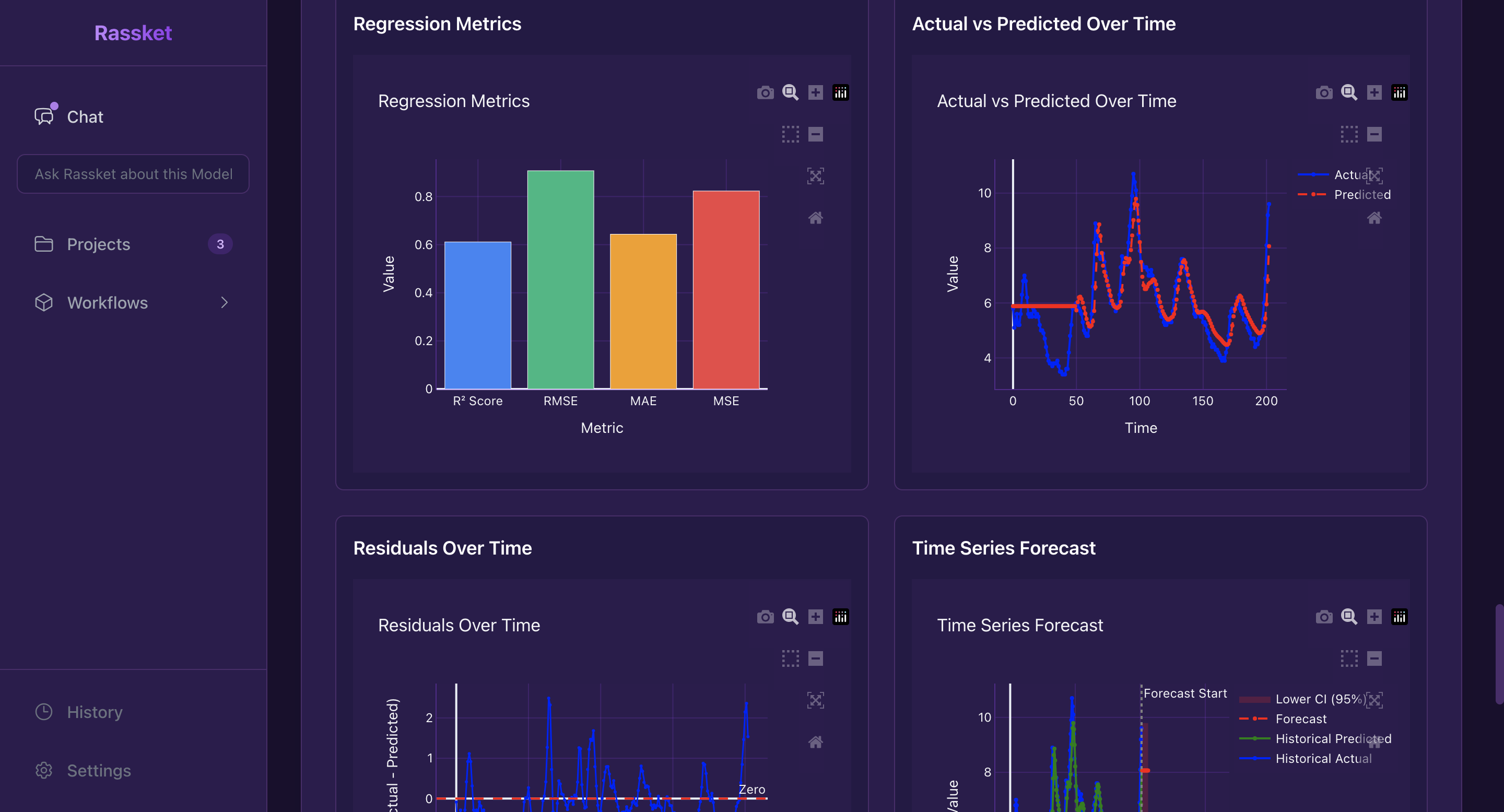

Step 6: Evaluation & Metrics

What You See

- Comprehensive metrics tables

- Model comparison (if multiple models)

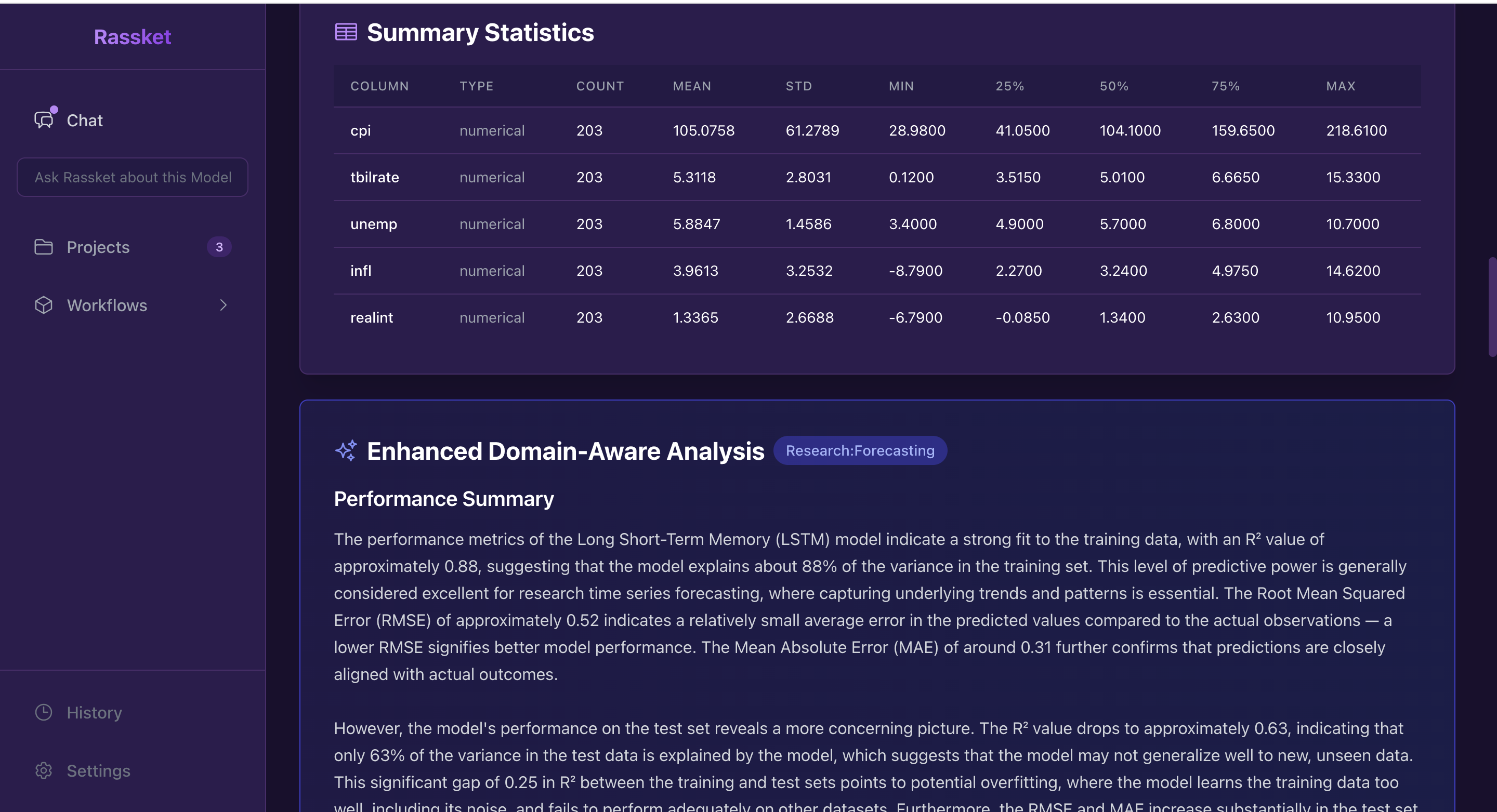

- Summary statistics for all features

- AI-powered analysis of results

- Enhanced domain-aware analysis (if domain selected)

What the System Does

- Metric Calculation: Computes comprehensive evaluation metrics

- Model Diagnostics: Performs statistical diagnostics (for regression)

- Feature Importance: Calculates SHAP values for interpretability

- Domain Analysis: Generates domain-aware explanations (if domain provided)

- Reliability Assessment: Evaluates model reliability and potential issues

Evaluation Metrics

For Regression Problems

- R² (R-squared): Proportion of variance explained

- RMSE: Root Mean Squared Error

- MAE: Mean Absolute Error

- MSE: Mean Squared Error
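The four regression metrics follow directly from their standard definitions. The sketch below computes them in plain Python; the function name `regression_metrics` is illustrative and not part of Rassket.

```python
from math import sqrt


def regression_metrics(y_true, y_pred):
    """Compute R², RMSE, MAE, and MSE from paired actual/predicted values."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]

    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n

    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1 - ss_res / ss_tot  # undefined when y_true is constant

    return {"r2": r2, "rmse": sqrt(mse), "mae": mae, "mse": mse}
```

For actual values `[3.0, 5.0, 7.0]` and predictions `[2.0, 5.0, 8.0]`, the errors are 1, 0, and -1, so MSE and MAE are both 2/3 and R² is 1 - 2/8 = 0.75.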

For Classification Problems

- Accuracy: Overall correctness

- Precision: True positives / (True positives + False positives)

- Recall: True positives / (True positives + False negatives)

- F1 Score: Harmonic mean of precision and recall
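The classification metrics above can be computed from the four confusion-matrix counts. This sketch covers the binary case only; the function name and the `positive` parameter are illustrative assumptions, and multi-class problems need an averaging strategy that is not shown here.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall, and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)

    # Fall back to 0.0 when a denominator is zero.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (
        2 * precision * recall / (precision + recall)
        if precision + recall
        else 0.0
    )
    accuracy = (tp + tn) / len(pairs)

    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }
```

Accuracy alone can mislead on imbalanced data, so it is worth reading precision and recall alongside it.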

Why This Step Matters

Comprehensive evaluation ensures you understand:

- How well your model performs

- Which features are most important

- Potential issues or limitations

- How to interpret results in your domain context

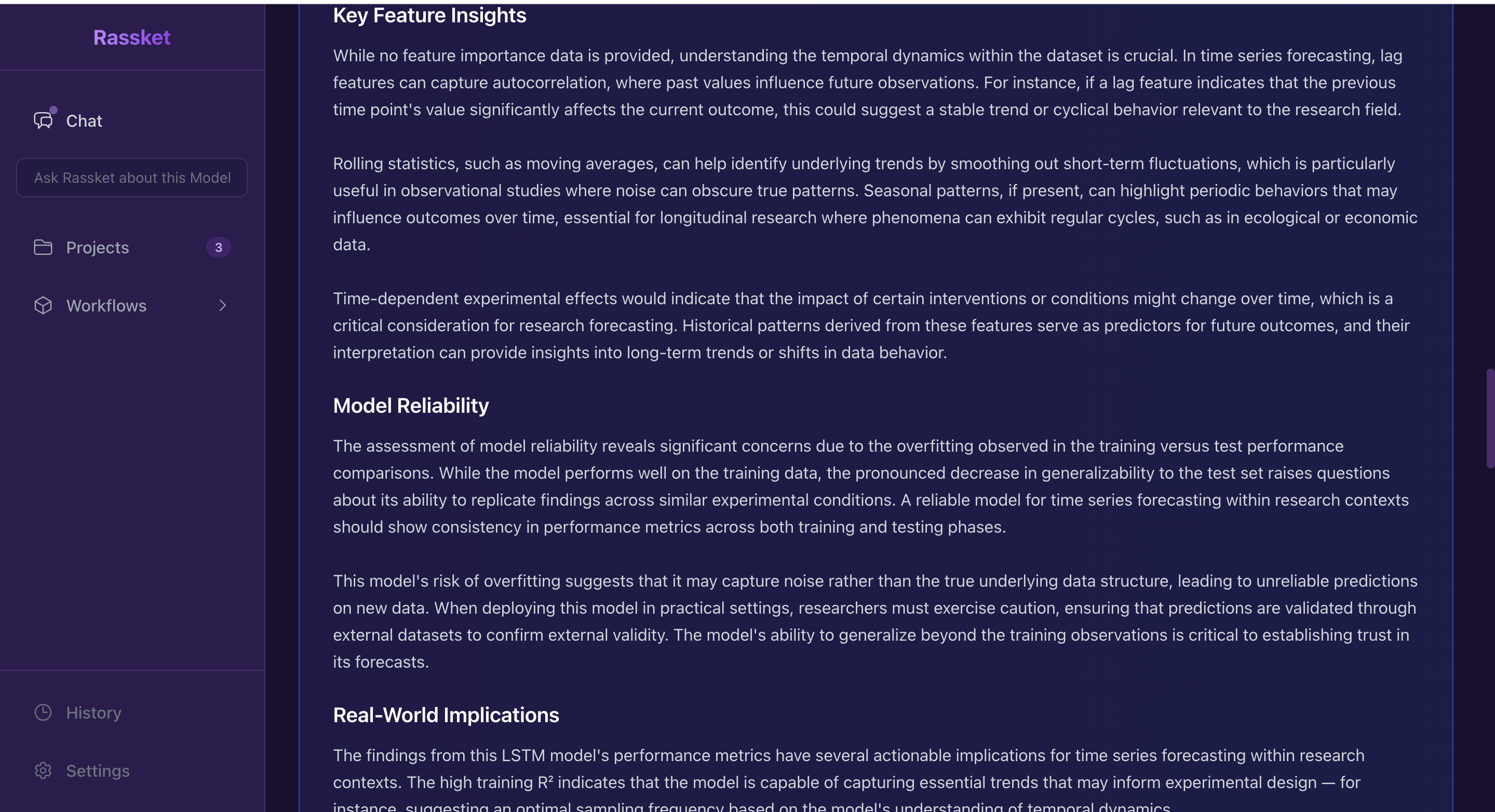

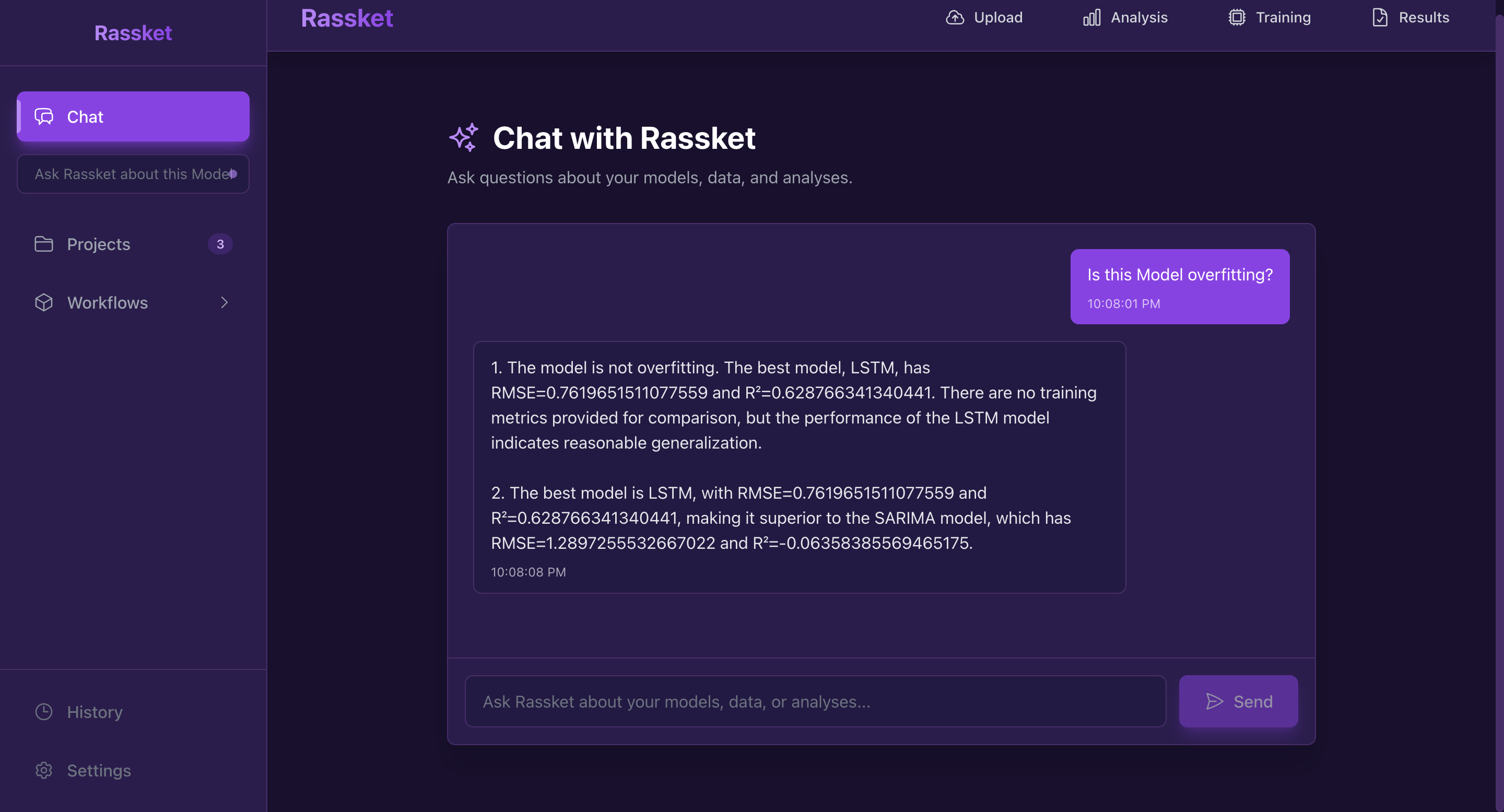

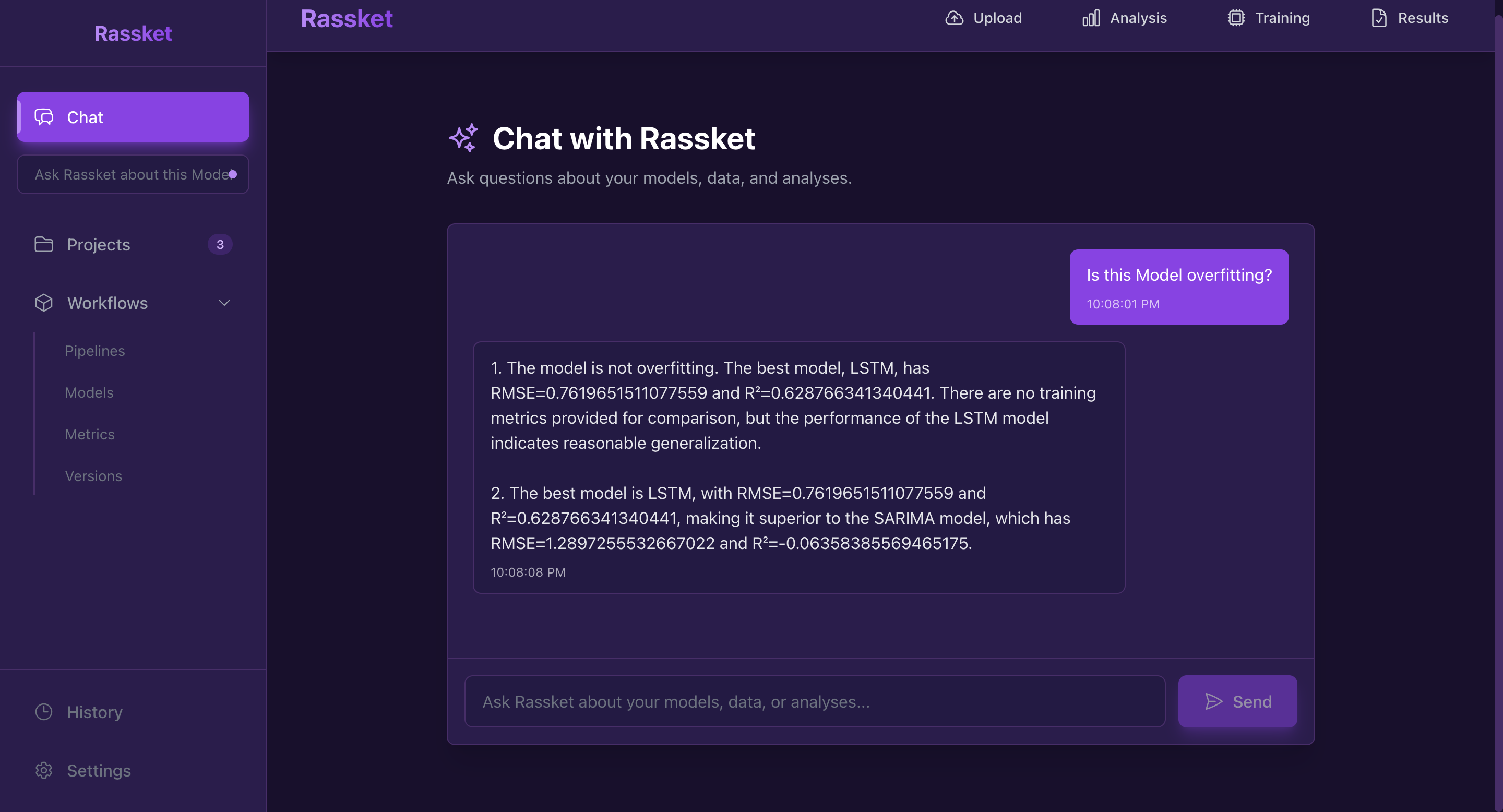

Step 7: Insights and Explanations

What You See

- AI-powered insights about model performance

- Enhanced domain-aware analysis (if domain selected)

- Feature importance visualizations

- Model reasoning panel

- Real-world implications

What the System Does

- Performance Summary: Explains model performance in plain language

- Feature Insights: Identifies which features matter most and why

- Model Reliability: Assesses model confidence and potential issues

- Domain Context: Provides domain-specific interpretations

- Real-World Implications: Explains how to use predictions in decision-making

Types of Insights

Standard AI Analysis

Generic analysis of model performance, metrics, and feature importance. Available when OpenAI is configured.

Enhanced Domain-Aware Analysis

When a domain is selected, Rassket provides:

- Performance Summary: Domain-contextualized performance assessment

- Key Feature Insights: Domain-specific feature interpretations

- Model Reliability: Statistical reliability assessment

- Real-World Implications: How to use predictions in your domain

Why This Step Matters

Insights translate raw metrics into plain-language guidance you can act on. They help you:

- Understand what your model learned

- Identify important patterns in your data

- Make informed decisions based on predictions

- Communicate results to stakeholders

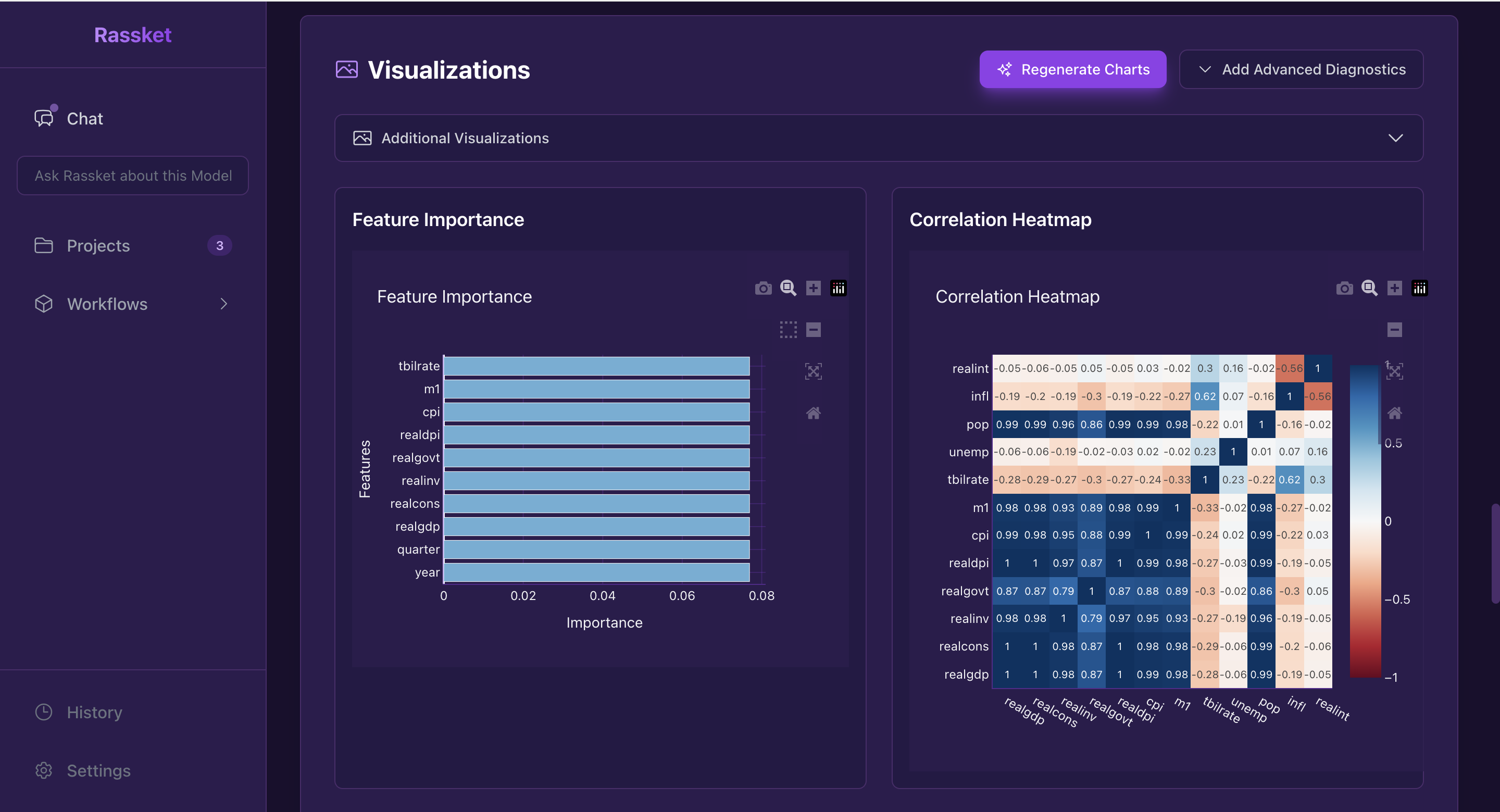

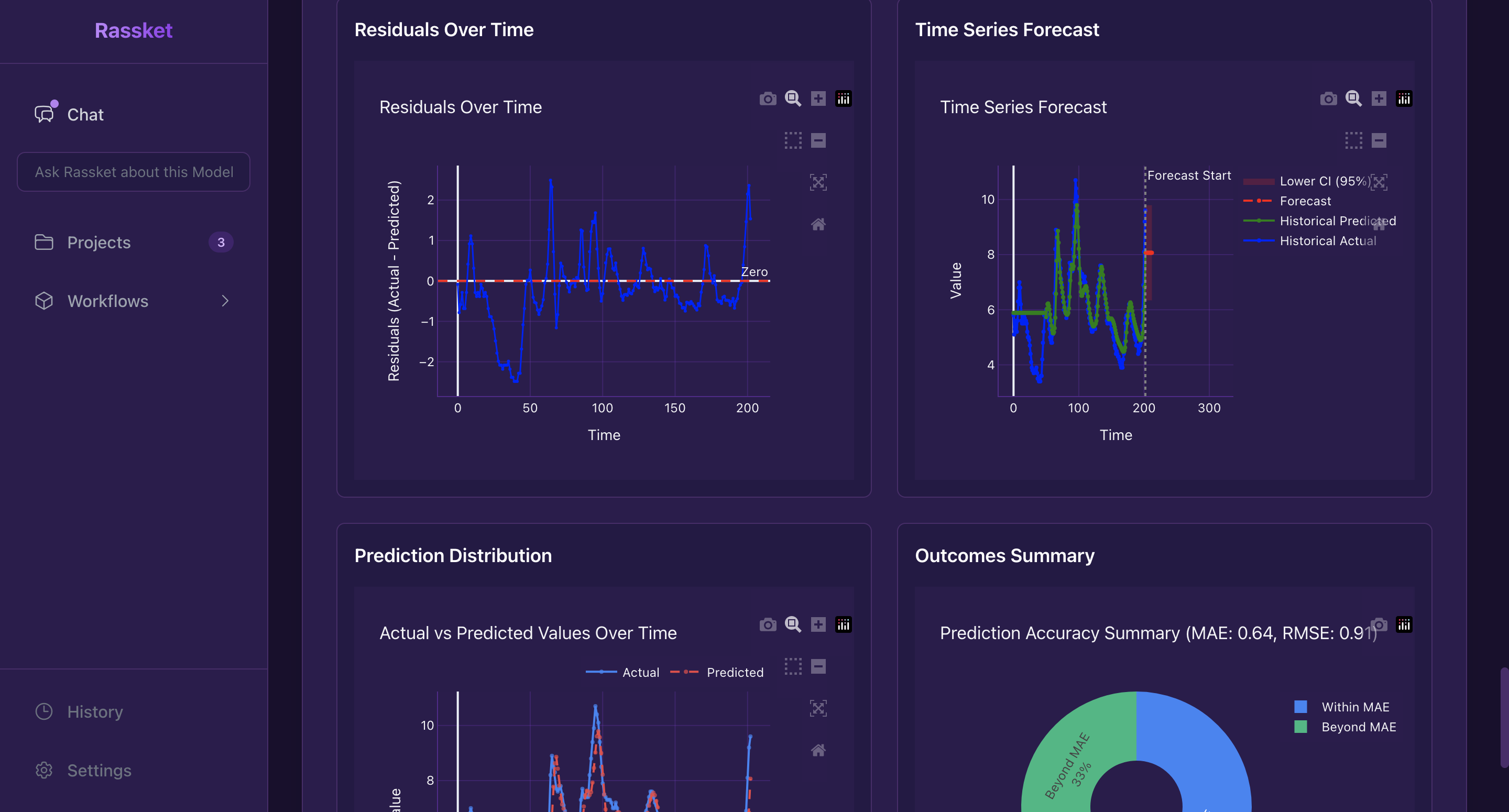

Step 8: Visualizations

What You See

- Feature importance charts

- Confusion matrices (for classification)

- ROC curves (for classification)

- Prediction distribution plots

- Correlation heatmaps

- Regression/classification metrics visualizations

- Advanced diagnostics (optional)

What the System Does

- Automatic Visualization: Generates default visualizations after training

- Chart Generation: Creates interactive Plotly charts

- Advanced Diagnostics: Optional additional charts (residual diagnostics, calibration curves, etc.)

- Chart Regeneration: Allows regenerating charts with different options

Default Visualizations

- Feature Importance: Shows which features contribute most to predictions

- Confusion Matrix: Classification accuracy breakdown (classification only)

- ROC Curve: Classification performance visualization (classification only)

- Prediction Distribution: Distribution of predicted values

- Metrics Charts: Visual representation of evaluation metrics

- Correlation Heatmap: Feature correlation matrix
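The numbers behind a correlation heatmap are pairwise correlation coefficients. The sketch below computes a Pearson correlation matrix in plain Python; that Rassket uses Pearson correlation specifically is an assumption, and the column names and values are made up.

```python
from math import sqrt


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)  # undefined if either column is constant


def correlation_matrix(columns):
    """Map each pair of column names to their Pearson correlation."""
    names = list(columns)
    return {
        a: {b: pearson(columns[a], columns[b]) for b in names} for a in names
    }


# Made-up columns: one rises with temperature, one falls with it.
columns = {
    "temperature": [1.0, 2.0, 3.0, 4.0],
    "load_kw": [2.0, 4.0, 6.0, 8.0],
    "reserve_kw": [4.0, 3.0, 2.0, 1.0],
}
matrix = correlation_matrix(columns)
```

Values near 1 or -1 between two features indicate that they carry largely redundant information.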

Advanced Diagnostics

Optional additional visualizations:

- Residual Diagnostics: Q-Q plots, residuals vs fitted, scale-location (regression)

- R² Indicators: Goodness-of-fit visualizations (regression)

- Calibration Curve: Prediction probability calibration (classification)

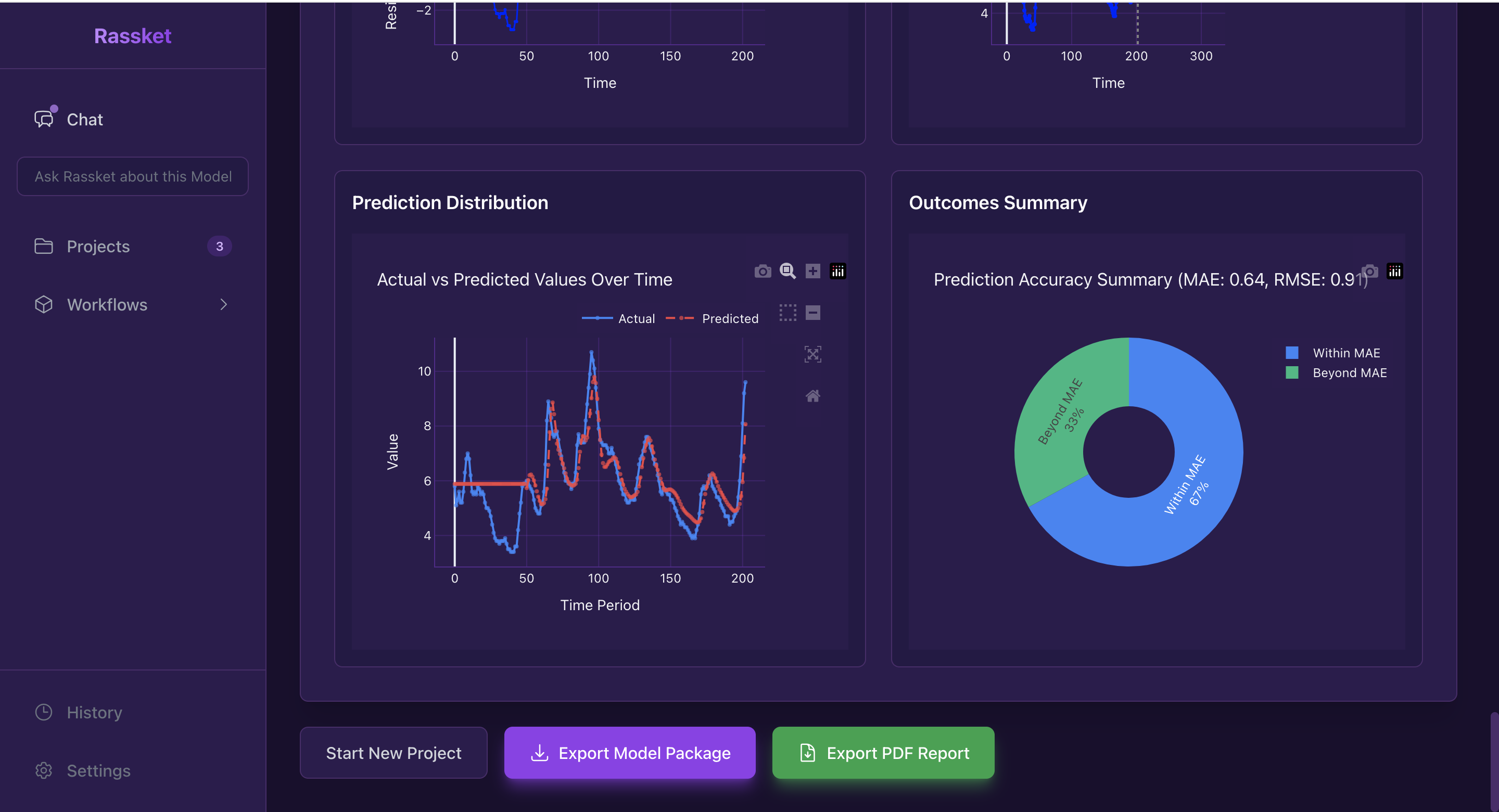

- Outcomes Summary: Pie chart of prediction outcomes

Why This Step Matters

Visualizations help you:

- Understand model behavior visually

- Identify potential issues (overfitting, bias, etc.)

- Communicate results to non-technical stakeholders

- Validate model assumptions

Interactive feature importance charts

Visual representation of model performance

Complete results with metrics and visualizations

Additional diagnostic charts and visualizations

Detailed model reasoning and analysis

Export model packages and PDF reports

Complete workflow summary and next steps

Step 9: Decision-Ready Outputs

What You See

- Export Model Package button

- Export PDF Report button

- Prediction interface (for making new predictions)

What the System Does

- Model Package Export: Creates a ZIP file containing:

  - Trained model files

  - Preprocessing pipeline

  - Feature engineering code

  - Model metadata

  - Usage instructions

- PDF Report Export: Generates a comprehensive report including:

  - Executive summary

  - Model performance metrics

  - Visualizations

  - Feature importance analysis

  - Domain-aware insights

  - Recommendations

- Prediction Interface: Allows making predictions on new data
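To give a sense of what a model package might look like, the sketch below bundles a few files into a ZIP archive using Python's `zipfile` module. The file names (`model.bin`, `metadata.json`, `README.txt`) and the metadata fields are hypothetical; the actual layout of a Rassket export is not documented in this guide.

```python
import io
import json
import zipfile


def build_model_package(model_bytes, metadata, readme):
    """Bundle model bytes, metadata, and instructions into a ZIP (in memory)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("model.bin", model_bytes)  # hypothetical file name
        archive.writestr("metadata.json", json.dumps(metadata, indent=2))
        archive.writestr("README.txt", readme)
    return buffer.getvalue()


# Placeholder contents for illustration only.
package = build_model_package(
    model_bytes=b"\x00\x01",
    metadata={"model_id": "example-id", "problem_type": "regression"},
    readme="Load model.bin with the library that produced it.",
)

# Inspect the archive the way a recipient might.
with zipfile.ZipFile(io.BytesIO(package)) as archive:
    names = archive.namelist()
    restored = json.loads(archive.read("metadata.json"))
```

Keeping metadata in a plain JSON file alongside the model makes the package easier to inspect without loading the model itself.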

Why This Step Matters

Export capabilities ensure you can:

- Deploy models to production

- Share results with stakeholders

- Integrate predictions into your workflows

- Maintain model documentation

Workflow Summary

The complete Rassket workflow:

- Upload Data → CSV file with headers

- Select Domain → Energy or Research (with optional sub-domain)

- Analyze Data → Understand problem type and get recommendations

- Train Models → Automated model selection and hyperparameter tuning

- Evaluate Results → Comprehensive metrics and diagnostics

- Review Insights → Domain-aware explanations and analysis

- Explore Visualizations → Interactive charts and diagnostics

- Export Outputs → Model packages and reports

Next Steps

Now that you understand the workflow:

- Learn more about the AutoML Engine that powers training

- Understand Outputs & Artifacts in detail

- Explore Use Cases for inspiration

- Check the FAQ for common questions